🔒 Locking Down Mastodon: Cilium Network Policies & Audit Mode

The Goal: Defense in Depth

Running Mastodon on my bare-metal cluster exposes it to the wider internet for federation. If the Mastodon container were ever compromised (RCE, supply chain attack, etc.), I need to ensure the attacker cannot use it as a jump box to scan my internal home lab network or reach sensitive data stores (Postgres/Redis) indiscriminately.

The objective was to implement a Zero Trust model using Cilium Network Policies (CNP):

- Ingress: Only allow traffic from the Cloudflare Tunnel.

- Egress: Allow federation (public internet) but strictly block access to private RFC1918 ranges (LAN).

- Infrastructure: Explicitly whitelist dependencies (DNS, Postgres, MinIO, Redis).

Phase 1: Safe Deployment with Policy Audit Mode

Applying network policies in a live environment is risky. To avoid breaking federation or user access, I used Cilium's PolicyAuditMode, which lets traffic pass but logs what would have been dropped.

I wrote a script to automate enabling this mode, as it requires toggling a flag on the specific Cilium endpoint ID corresponding to the Mastodon pods.

Script: enable_audit.sh

This script iterates over the Mastodon pods, finds each pod's hosting node and Cilium Endpoint ID (cep), and runs the cilium-dbg command inside the Cilium agent on that node.

# Core logic from enable_audit.sh
kubectl exec -n kube-system "$CILIUM_POD" -c cilium-agent -- cilium-dbg endpoint config "$EP_ID" PolicyAuditMode=Enabled

With Audit Mode enabled, I monitored the flows with the Hubble CLI to identify the whitelist entries I needed, without causing downtime:

# Forward Hubble API
cilium hubble port-forward

# Observe traffic that would be dropped (Verdict: AUDIT)
hubble observe --to-label app.kubernetes.io/name=mastodon --verdict AUDIT -f
hubble observe --from-label app.kubernetes.io/name=mastodon --verdict AUDIT -f

Phase 2: Diagnosis & Challenges

The MinIO "toServices" Trap

One of the biggest hurdles was configuring access to the MinIO tenant. Initially, I attempted to use toServices to allow egress to the MinIO Service. However, traffic was still being blocked/audited.

The Fix: I switched from toServices to toEndpoints. Cilium operates primarily on identity (derived from labels). Using toEndpoints with the specific tenant labels provided the correct identity mapping for the policy engine.

Final working config snippet:

- toEndpoints:
    - matchLabels:
        k8s:io.kubernetes.pod.namespace: minio
        v1.min.io/tenant: production
  toPorts:
    - ports:
        - port: "9000"
          protocol: TCP

Redis: Moving from IP to FQDN

In previous iterations, I managed Redis access via CIDR blocks (static IPs). This was brittle; if the Redis service IP changed, the policy broke.

I used Cilium's FQDN-based policy support to allow traffic by DNS name, mastodon.redis.prod.nasreddine.com. This also required the policy to allow DNS (UDP/53), so that Cilium's DNS proxy could observe the DNS responses and map the name to its current IPs dynamically.
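To make the DNS dependency concrete, here is a minimal sketch of what an FQDN-based Redis rule could look like. It is not the actual policy file: the policy name, the `mastodon` namespace, the kube-dns labels, and port 6379 (the Redis default) are assumptions. Only the pod label and the Redis hostname come from this post.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: mastodon-redis-fqdn        # hypothetical name
  namespace: mastodon              # assumed namespace
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: mastodon
  egress:
    # Allow DNS lookups and send them through Cilium's DNS proxy,
    # so the agent can learn which IPs the FQDN resolves to.
    - toEndpoints:
        - matchLabels:
            "k8s:io.kubernetes.pod.namespace": kube-system   # assumed DNS location
            "k8s:k8s-app": kube-dns                          # assumed DNS labels
      toPorts:
        - ports:
            - port: "53"
              protocol: ANY
          rules:
            dns:
              - matchPattern: "*"
    # Allow Redis by name instead of by static IP.
    - toFQDNs:
        - matchName: mastodon.redis.prod.nasreddine.com
      toPorts:
        - ports:
            - port: "6379"          # assumed: default Redis port
              protocol: TCP
```

Without the `rules.dns` section the lookup itself may still succeed, but Cilium has no DNS response to learn from, so the `toFQDNs` rule would never match any IP.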

Phase 3: The Final Policies

The solution is split into three logical policy files for maintainability.

1. Ingress (Cloudflare Only)

Locks down ingress so that only the Cloudflare Tunnel pods can talk to Mastodon's web ports (3000, 4000, 8080). This removes the need to expose Mastodon through a standard LoadBalancer.
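A minimal sketch of such an ingress policy follows. The ports and the Mastodon pod label come from this post. The policy name, both namespaces, and the `cloudflared` pod label are hypothetical placeholders that would need to match the real deployment.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: mastodon-ingress-cloudflare   # hypothetical name
  namespace: mastodon                 # assumed namespace
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: mastodon
  ingress:
    # Only pods carrying the tunnel's identity may connect.
    - fromEndpoints:
        - matchLabels:
            "k8s:io.kubernetes.pod.namespace": cloudflare   # assumed namespace
            app.kubernetes.io/name: cloudflared             # assumed pod label
      toPorts:
        - ports:
            - port: "3000"
              protocol: TCP
            - port: "4000"
              protocol: TCP
            - port: "8080"
              protocol: TCP
```

Once any ingress rule selects the Mastodon pods, Cilium denies all other ingress to them by default, so no explicit deny rule is needed.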

2. Egress (Federation vs. LAN)

This is the most critical security boundary. It allows traffic to 0.0.0.0/0 (required for federating with other instances) but explicitly excludes my internal ranges: the 192.168.0.0/16 LAN and the 100.64.0.0/10 range used for pod and service CIDRs. This effectively jails the Mastodon instance, preventing it from talking to my router, other VMs, or the NAS.

egress:
  - toCIDRSet:
      - cidr: 0.0.0.0/0
        except:
          - 192.168.0.0/16
          - 100.64.0.0/10 # K8s Pod/Service CIDRs

3. Infrastructure Core

Whitelists the specific dependencies: DNS, Postgres, MinIO (via Endpoints), and Redis (via FQDN).
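As an illustration of one such whitelist entry, a Postgres rule could follow the same identity-based pattern as the MinIO one. This is a sketch under assumptions: the policy name, the namespaces, and the Postgres pod label are hypothetical, and 5432 is simply the Postgres default port.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: mastodon-infra-postgres     # hypothetical name
  namespace: mastodon               # assumed namespace
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: mastodon
  egress:
    - toEndpoints:
        - matchLabels:
            "k8s:io.kubernetes.pod.namespace": postgres   # assumed namespace
            app.kubernetes.io/name: postgresql            # assumed pod label
      toPorts:
        - ports:
            - port: "5432"          # assumed: default Postgres port
              protocol: TCP
```

Cilium allow rules are additive across policies, so a rule like this still admits in-cluster database traffic even though the federation policy leaves the pod and service range out of its own allow rule.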

egress:

- toCIDRSet:

- cidr: 0.0.0.0/0

except:

- 192.168.0.0/16

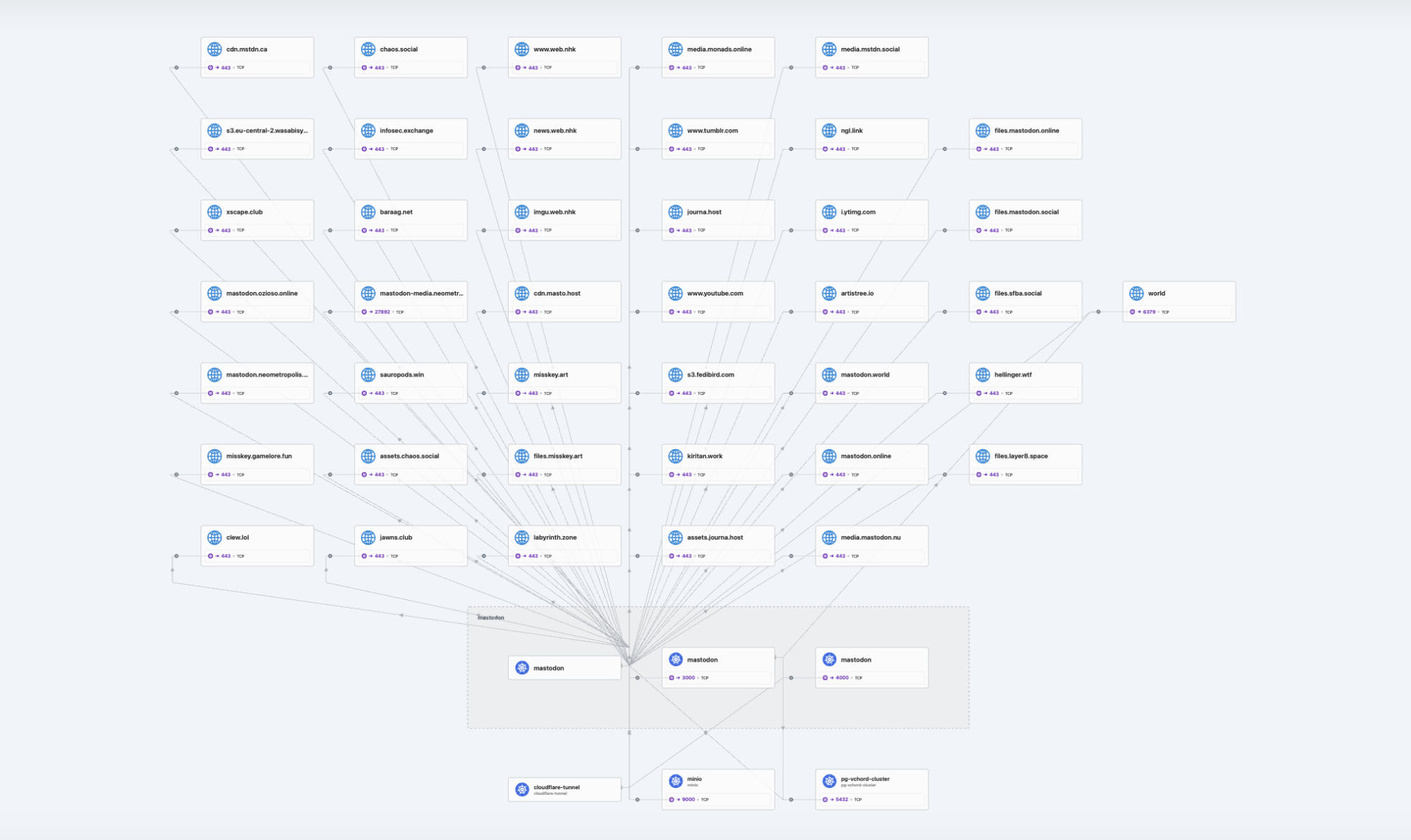

- 100.64.0.0/10 # K8s Pod/Service CIDRsVisualization#

The Hubble UI confirms the policy is working as intended. We can see the clear separation of allowed traffic flows and the enforced identities.